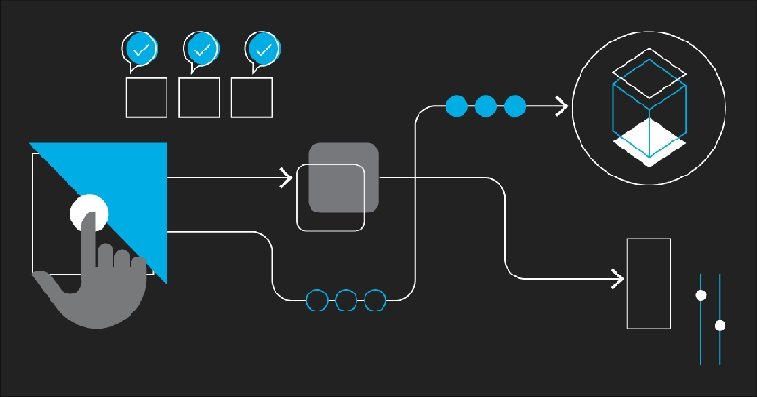

What is Jenkins? Jenkins is an open-source automation server widely used for continuous integration and continuous delivery (CI/CD). It provides tools for orchestrating pipelines that automate intricate processes and help developers deliver software efficiently. Jenkins Pipeline orchestration means overseeing and automating a series of tasks or jobs with Jenkins. Jenkins Pipeline itself is a suite of plugins that supports creating and integrating continuous delivery pipelines in Jenkins.

In this blog, let us explore advanced techniques for Jenkins pipelines. By adopting these strategies, teams can build scalable, maintainable pipelines that make software development and deployment more efficient and keep integration and delivery running smoothly. Whether you want to improve builds, optimize testing, or automate deployments, this blog offers practical insights to advance your Jenkins skills.

Advanced Jenkins Pipeline Techniques for CI/CD Orchestration

Advanced Jenkins Pipeline techniques can enhance your CI/CD orchestration and make your development process more efficient, robust, and flexible. Here are some advanced techniques for automating complex CI/CD workflows and delivering software in an efficient, scalable, and maintainable way.

Declarative vs. Scripted Pipelines

Jenkins pipelines use two syntax styles: Declarative and Scripted. Knowing their differences helps you choose the best approach for your CI/CD workflows.

Declarative Pipelines use a structured syntax that favors simplicity. They provide a clear, concise way to define pipeline stages, with built-in features such as post blocks, which specify actions based on pipeline outcomes, and when conditions, which control whether a stage executes. Declarative syntax is more restrictive, which reduces complexity and makes pipelines easier to maintain, so it is a good fit for straightforward CI/CD tasks.
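As a rough illustration of that structure, here is a minimal declarative sketch that combines a when condition with a post block. DEPLOY_ENABLED is a hypothetical flag used only for this example.

```groovy
pipeline {
    agent any
    environment {
        // Hypothetical flag used only to drive the when condition below
        DEPLOY_ENABLED = 'true'
    }
    stages {
        stage('Build') {
            steps {
                echo 'Building...'
            }
        }
        stage('Deploy') {
            // Skip this stage unless DEPLOY_ENABLED equals 'true'
            when {
                environment name: 'DEPLOY_ENABLED', value: 'true'
            }
            steps {
                echo 'Deploying...'
            }
        }
    }
    post {
        // Runs after all stages, whatever the build result
        always {
            echo "Pipeline finished with result: ${currentBuild.currentResult}"
        }
    }
}
```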

Scripted Pipelines use Groovy scripting for greater flexibility and complex logic. They are more powerful and permit intricate control structures such as loops and conditionals, but this added flexibility can also increase complexity. Scripted pipelines are ideal when custom logic or advanced orchestration is required.
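For comparison, a scripted pipeline is ordinary Groovy wrapped in node and stage blocks. The sketch below uses hypothetical test suite names and shows a loop with a try/catch, constructs that declarative syntax only permits inside a script block.

```groovy
// Scripted pipeline: plain Groovy executed inside a node (agent) block
node {
    stage('Build') {
        echo 'Building...'
    }
    stage('Test') {
        // Hypothetical test suite names, for illustration only
        def suites = ['unit', 'integration']
        for (int i = 0; i < suites.size(); i++) {
            def suite = suites[i]
            try {
                echo "Running ${suite} tests"
                // Test logic for this suite
            } catch (err) {
                // Mark the build unstable but keep looping over the remaining suites
                echo "Suite ${suite} failed: ${err}"
                currentBuild.result = 'UNSTABLE'
            }
        }
    }
}
```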

Consider the following when choosing an approach:

- If your pipeline is straightforward and you value simplicity and maintainability, a declarative pipeline is likely the best choice.

- If your pipeline requires complex logic, advanced scripting capabilities, or custom Groovy code, a scripted pipeline provides the flexibility you need.

Pipeline Stages and Parallelism

Pipeline orchestration in Jenkins involves dividing your CI/CD process into distinct stages for better organization and parallel execution of tasks. This approach enhances pipeline maintainability and efficiency by logically segmenting workflows.

Stages group pipeline activities into manageable segments. For example, a pipeline might include stages for “Build”, “Test”, and “Deploy”. Each stage can contain multiple steps, such as checking out code, running builds, executing tests, or deploying artifacts. Organizing your pipeline into stages makes the flow of tasks clearer and makes potential issues easier to isolate.

Parallelism in Jenkins allows you to execute multiple stages concurrently for significant time savings when tasks can run independently. This technique is useful in large projects with multiple test suites where parallel execution can drastically reduce the overall pipeline execution time.

For example, you can run unit tests and integration tests simultaneously to shorten feedback loops. Parallelism also enables concurrent deployments to multiple environments, which is valuable for complex deployment scenarios and scaling. Incorporating parallelism improves pipeline efficiency and speeds up software delivery.

Here’s an example pipeline script demonstrating the use of stages and parallel execution:

```groovy
pipeline {
    agent any
    stages {
        stage('Build') {
            steps {
                // Build step logic
                echo 'Building...'
            }
        }
        stage('Test') {
            parallel {
                stage('Unit Tests') {
                    steps {
                        // Unit tests logic
                        echo 'Running unit tests...'
                    }
                }
                stage('Integration Tests') {
                    steps {
                        // Integration tests logic
                        echo 'Running integration tests...'
                    }
                }
            }
        }
    }
}
```

In this example, the Test stage contains two parallel sub-stages, Unit Tests and Integration Tests. Running them in parallel, provided enough executors are available, reduces the pipeline's overall execution time and delivers faster feedback for continuous delivery.
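Parallel branches do not have to be written out by hand. The following sketch, which assumes hypothetical environment names, builds the branches at run time inside a script block and passes them to the parallel step, covering the concurrent multi-environment deployments mentioned earlier.

```groovy
pipeline {
    agent any
    stages {
        stage('Deploy') {
            steps {
                script {
                    // Hypothetical target environments; replace with your own
                    def targets = ['staging', 'qa', 'demo']
                    def branches = [:]
                    for (int i = 0; i < targets.size(); i++) {
                        // Copy into a local variable so each closure captures its own value
                        def target = targets[i]
                        branches['Deploy to ' + target] = {
                            echo "Deploying to ${target}"
                            // Deployment logic for this environment
                        }
                    }
                    // Abort the remaining branches as soon as one fails
                    branches.failFast = true
                    parallel branches
                }
            }
        }
    }
}
```

Because the map is built at run time, adding another environment only means extending the list.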

Cloud platforms like LambdaTest can be integrated with Jenkins pipelines for added scalability and flexibility. Through plugins and integrations, pipelines can use cloud-based infrastructure for tasks such as dynamic agent provisioning, on-demand builds, automated testing, and deployments to cloud environments.

LambdaTest is an AI-powered test orchestration and execution platform. Its Jenkins plugin connects your Jenkins CI instance to the LambdaTest Selenium grid, so you can automate your Selenium test scripts and accelerate cross-browser testing. The grid provides access to more than 3000 web browsers online, helping you achieve higher test coverage with your Selenium test suite.

Pipeline Environment Variables and Parameters

Environment variables and parameters are key elements in Jenkins pipelines that provide customization and flexibility for a variety of CI/CD tasks. Let’s explore their distinct roles and usage.

Environment Variables

Environment variables hold values that steps throughout the pipeline can read. They offer a way to define consistent values across stages, making common configuration easier to manage. Variables declared in an environment block at the top of the pipeline are available to every stage, while variables declared inside a stage are visible only within that stage.

In the following example, the environment variable APP_NAME is set to a specific application name so that its value can be reused in multiple places within the pipeline:

```groovy
pipeline {
    agent any
    environment {
        APP_NAME = 'MyApp'
    }
    stages {
        stage('Build') {
            steps {
                echo "Building ${APP_NAME}"
                // Build step logic
            }
        }
        stage('Deploy') {
            steps {
                echo "Deploying ${APP_NAME} to production"
                // Deployment step logic
            }
        }
    }
}
```
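Variables can also be scoped to a single stage. In this sketch, DEPLOY_TARGET is a hypothetical variable that exists only inside the Deploy stage, while APP_NAME remains visible everywhere. The sh step assumes a Unix-like agent.

```groovy
pipeline {
    agent any
    environment {
        // Pipeline-level: visible in every stage
        APP_NAME = 'MyApp'
    }
    stages {
        stage('Deploy') {
            environment {
                // Stage-level (hypothetical): visible only inside this stage
                DEPLOY_TARGET = 'production'
            }
            steps {
                // Groovy string interpolation
                echo "Deploying ${APP_NAME} to ${DEPLOY_TARGET}"
                // Environment variables are also exported to shell steps
                sh 'echo "Shell sees $APP_NAME and $DEPLOY_TARGET"'
            }
        }
    }
}
```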

Pipeline Parameters

Pipeline parameters allow for dynamic input when triggering a pipeline manually or through automation tools. This makes pipelines adaptable to different contexts such as deploying to different environments or providing custom build parameters.

Here’s an example where a parameter ENV is defined to specify the deployment environment:

```groovy
pipeline {
    agent any
    parameters {
        string(name: 'ENV', defaultValue: 'dev', description: 'Target deployment environment')
    }
    stages {
        stage('Deploy') {
            steps {
                echo "Deploying to ${params.ENV}"
                // Deployment step logic
            }
        }
    }
}
```

In this example, the ENV parameter has a default value of 'dev', but it can be changed when triggering the pipeline, which makes deployments flexible. The echo step shows how parameters are accessed through the params object within a pipeline step.
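Besides free-form strings, Jenkins supports other parameter types. The following sketch, with a hypothetical RUN_SMOKE_TESTS parameter, restricts ENV to a fixed list of values and uses a boolean parameter to decide whether a smoke-test stage runs.

```groovy
pipeline {
    agent any
    parameters {
        // The first choice ('dev') is used as the default value
        choice(name: 'ENV', choices: ['dev', 'staging', 'prod'], description: 'Target environment')
        booleanParam(name: 'RUN_SMOKE_TESTS', defaultValue: true, description: 'Run smoke tests after deploying')
    }
    stages {
        stage('Deploy') {
            steps {
                echo "Deploying to ${params.ENV}"
            }
        }
        stage('Smoke Tests') {
            // Run only when the boolean parameter is checked
            when {
                expression { params.RUN_SMOKE_TESTS }
            }
            steps {
                echo "Running smoke tests against ${params.ENV}"
            }
        }
    }
}
```

Note that parameters declared in a Jenkinsfile typically appear in the "Build with Parameters" form only after the pipeline has run once.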

Combining environment variables and parameters makes Jenkins pipelines flexible, customizable, and adaptable to varying requirements. Together they support use cases ranging from simple builds to complex multi-environment deployments, increasing the versatility of your CI/CD pipelines.

Jenkinsfile from SCM

Maintaining Jenkins pipeline definitions in source control ensures that they are versioned, traceable, and consistent with your codebase. This approach, known as “pipeline-as-code”, promotes better collaboration, easier maintenance, and improved auditability in CI/CD workflows.

Jenkinsfile

A Jenkinsfile is a text file that contains the pipeline script and is typically stored in the root directory of a project repository. Keeping this file in source control lets you manage pipeline logic alongside your codebase, with straightforward changes, versioning, and collaboration among team members. It also means changes to pipeline logic can go through code review and be examined and approved just like any other code change.

Here’s a simple Jenkinsfile example stored in a repository:

```groovy
pipeline {
    agent any
    stages {
        stage('Build') {
            steps {
                echo 'Building...'
                // Build logic
            }
        }
        stage('Test') {
            steps {
                echo 'Testing...'
                // Test logic
            }
        }
    }
}
```

By keeping this Jenkinsfile in source control, teams ensure that pipeline changes are tracked and associated with specific commits. This provides traceability for CI/CD processes and allows teams to roll back to previous versions if needed.
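To make the link between a build and its commit explicit, a pipeline can perform the checkout itself and log the revision. This sketch assumes a Git repository, the Git plugin, and a job configured to load its Jenkinsfile from SCM (which is what makes the scm variable available); checkout scm returns a map of SCM variables such as GIT_COMMIT.

```groovy
pipeline {
    agent any
    options {
        // Disable the automatic checkout so the Checkout stage controls it
        skipDefaultCheckout()
    }
    stages {
        stage('Checkout') {
            steps {
                script {
                    // Check out the repository configured for this job
                    def scmVars = checkout scm
                    // Record which commit this build is running against
                    echo "Building commit ${scmVars.GIT_COMMIT}"
                }
            }
        }
    }
}
```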

Multi-branch Pipelines

Multi-branch pipelines automatically create a pipeline for each branch in a repository. This is useful for workflows that require building and testing multiple branches or feature sets. It simplifies the setup process as Jenkins automatically discovers new branches and configures pipelines based on the Jenkinsfile found in each branch.

Developers can create feature branches in their repository, and Jenkins automatically generates a pipeline for each one, so branches can be built and tested independently and in parallel. This approach suits teams that practice feature branching or maintain multiple versions of a project, making it easier to manage CI/CD across branches.
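Because every branch builds from its own copy of the Jenkinsfile, one file can still behave differently per branch. In a multibranch pipeline, Jenkins sets BRANCH_NAME and supports the branch condition in when blocks. The branch names below are assumptions about your branching convention.

```groovy
pipeline {
    agent any
    stages {
        stage('Build and Test') {
            steps {
                // BRANCH_NAME is provided by multibranch pipelines
                echo "Building branch ${env.BRANCH_NAME}"
            }
        }
        stage('Deploy to Staging') {
            // Hypothetical integration branch name
            when { branch 'develop' }
            steps {
                echo 'Deploying to staging...'
            }
        }
        stage('Deploy to Production') {
            when { branch 'main' }
            steps {
                echo 'Deploying to production...'
            }
        }
    }
}
```

With this layout, feature branches only build and test, while the deploy stages are skipped for them.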

Pipeline Libraries and Reusability

Jenkins supports the use of shared pipeline libraries which are repositories containing reusable pipeline code and functions. These shared libraries help reduce redundancy, promote code reuse, and maintain consistency across Jenkins pipelines, providing a centralized approach to common tasks and logic.

Shared libraries are collections of Groovy scripts and other resources that can be imported into Jenkins pipelines. They can reside in a dedicated repository or within the same repository as your Jenkinsfile in a designated folder. These libraries contain functions, steps, or even entire pipeline stages that can be used across multiple pipelines, enabling you to maintain common logic in one place.

Using Shared Libraries

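To use a shared library, first register it in Jenkins (for example, under Global Pipeline Libraries in the system configuration). Then add the @Library annotation at the beginning of your Jenkinsfile, referencing the library by the name it was registered under. This imports the library's functions and makes them available in your pipeline. The trailing underscore (_) gives the annotation something to attach to when nothing is imported explicitly.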

```groovy
@Library('my-shared-library') _

pipeline {
    agent any
    stages {
        stage('Test') {
            steps {
                mySharedLibrary.runTests()
            }
        }
    }
}
```

In this example, a shared library called 'my-shared-library' is imported at the start of the pipeline. The “Test” stage then calls runTests() through mySharedLibrary, a global variable exposed by the library, demonstrating how shared libraries can encapsulate common functionality.
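For that call to resolve, the library needs a global variable named mySharedLibrary. The following is a minimal sketch of the library side, assuming the conventional vars/ directory layout; the default test command (Maven on a Unix-like agent) is a hypothetical placeholder.

```groovy
// vars/mySharedLibrary.groovy (in the my-shared-library repository)
// Jenkins exposes each file under vars/ as a global variable named after the file,
// so pipelines can call mySharedLibrary.runTests().

// Hypothetical helper: runs a test command, defaulting to a Maven build.
// Callers can pass their own command instead.
def runTests(String command = 'mvn -B test') {
    echo "Running tests with: ${command}"
    sh command
}
```

A pipeline that builds with Gradle could call mySharedLibrary.runTests('./gradlew test') instead, reusing the same helper without duplicating the logic.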

Shared libraries are a way to modularize your Jenkins pipelines to manage and reuse common logic efficiently. You can enhance pipeline consistency, reduce maintenance efforts, and improve collaboration among teams working on different pipelines by implementing shared libraries.

Pipeline Notifications and Post Actions

Effective pipeline orchestration in Jenkins requires not only smooth execution but also effective communication and error handling. Post Actions and Notifications are two key features that address these needs and ensure that stakeholders are informed of pipeline outcomes and that appropriate actions are taken based on pipeline results.

Post Actions

The post block in Jenkins pipelines allows you to define actions that are triggered after a stage or pipeline completes. This block offers flexibility by enabling you to specify conditions for these actions, such as success, failure, or always. This functionality is crucial for implementing automated clean-up, error handling, or notifications based on pipeline outcomes.

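In the following example, a pipeline-level post block sends Slack notifications when the pipeline succeeds or fails. The slackSend step is provided by the Slack Notification plugin, which must be installed and configured: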

```groovy
pipeline {
    agent any
    stages {
        stage('Build') {
            steps {
                // Build step logic
                echo 'Building...'
            }
        }
    }
    post {
        success {
            slackSend(channel: '#ci-notifications', message: 'Build succeeded!')
        }
        failure {
            slackSend(channel: '#ci-notifications', message: 'Build failed!')
        }
    }
}
```

This example demonstrates how post conditions let a pipeline respond differently to different outcomes.
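A post block can also be attached to an individual stage. The sketch below assumes a Maven project, the JUnit plugin, and a Unix-like agent; the test command and report path are hypothetical. It publishes test reports whether or not tests fail and cleans the workspace at the end of the run.

```groovy
pipeline {
    agent any
    stages {
        stage('Test') {
            steps {
                // Hypothetical Maven command; let Maven exit successfully on test
                // failures so the junit step can mark the build UNSTABLE instead
                sh 'mvn -B test -Dmaven.test.failure.ignore=true'
            }
            post {
                // Stage-level: publish test reports on every run
                always {
                    junit 'target/surefire-reports/*.xml'
                }
            }
        }
    }
    post {
        unstable {
            echo 'Some tests failed; the build is marked UNSTABLE.'
        }
        // cleanup runs after every other post condition
        cleanup {
            deleteDir()
        }
    }
}
```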

Notifications

Notifications keep teams updated on pipeline status and results. Jenkins supports various notification methods, which can be integrated into pipelines to alert relevant stakeholders about build successes, failures, or other significant events.

Besides Slack, other popular notification methods include:

- Email: Use the mail step to send email notifications to designated recipients based on pipeline outcomes.

- Webhook Integrations: Configure webhooks to trigger external services or send notifications to custom endpoints.
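Both options fit naturally into a post block. The following is a minimal sketch, assuming the Mailer plugin is configured with an SMTP server and that curl is available on a Unix-like agent; the email address and webhook URL are hypothetical placeholders.

```groovy
pipeline {
    agent any
    stages {
        stage('Build') {
            steps {
                echo 'Building...'
            }
        }
    }
    post {
        failure {
            // Email notification (hypothetical recipient)
            mail to: 'team@example.com',
                 subject: "Build failed: ${env.JOB_NAME} #${env.BUILD_NUMBER}",
                 body: "See ${env.BUILD_URL} for details."
        }
        always {
            // Webhook notification: POST the result to a hypothetical endpoint
            sh """
                curl -s -X POST -H 'Content-Type: application/json' \\
                     -d '{"job": "${env.JOB_NAME}", "result": "${currentBuild.currentResult}"}' \\
                     https://hooks.example.com/ci
            """
        }
    }
}
```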

Conclusion

In conclusion, advanced Jenkins pipeline orchestration techniques provide a flexible approach to continuous integration and continuous delivery. By incorporating these methods, you can create scalable, maintainable, and efficient workflows that align with your development and deployment goals.

Techniques like parallelism, shared libraries, and pipeline environment variables offer an improved way to manage complex tasks while post actions and notifications enhance communication and error handling. Experimenting with these concepts allows you to fine-tune your Jenkins pipelines for optimal performance and reliability.